September 2, 2025

Lost in Translation? Vocabulary Alignment for Source-Free Adaptation in Open-Vocabulary Semantic Segmentation

VocAlign: A framework for source-free domain adaptation in open-vocabulary segmentation

We focus on foundational academic AI research. We publish in open venues, share our code, and push the field forward.

How can AI agents adapt to ever-changing environments? By integrating advanced perception, Q&A, and control strategies with techniques such as test-time training, model merging, and on-the-fly knowledge updates, we aim to develop robust, lifelong-learning systems that continuously refine their capabilities in response to new rules, tasks, and scenarios.

Text-to-image diffusion models excel at generating high-quality visuals, but reconstructing entire scenes remains challenging because of their greater spatial complexity. In this project, we propose a pipeline that synthesizes realistic 3D environments from minimal input, combining texturing and semantic preservation with pathfinding and 3D Gaussian Splatting.

Latest papers from the lab, with full details in each blog post.

September 2, 2025

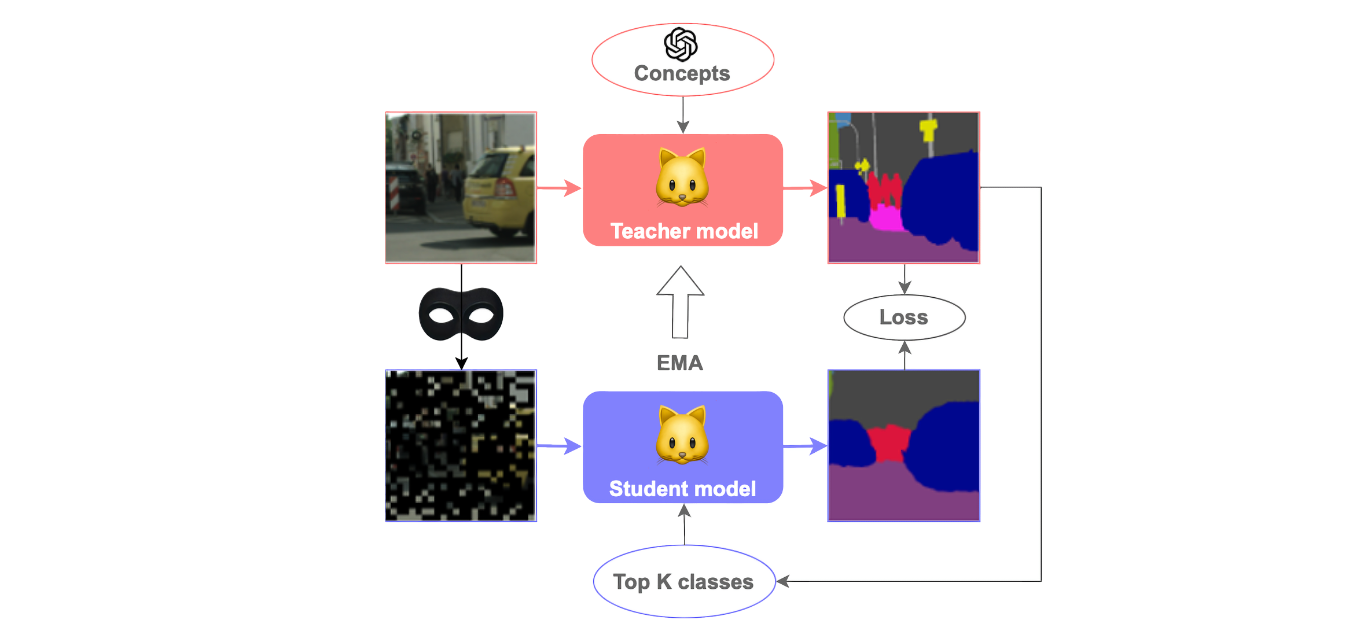

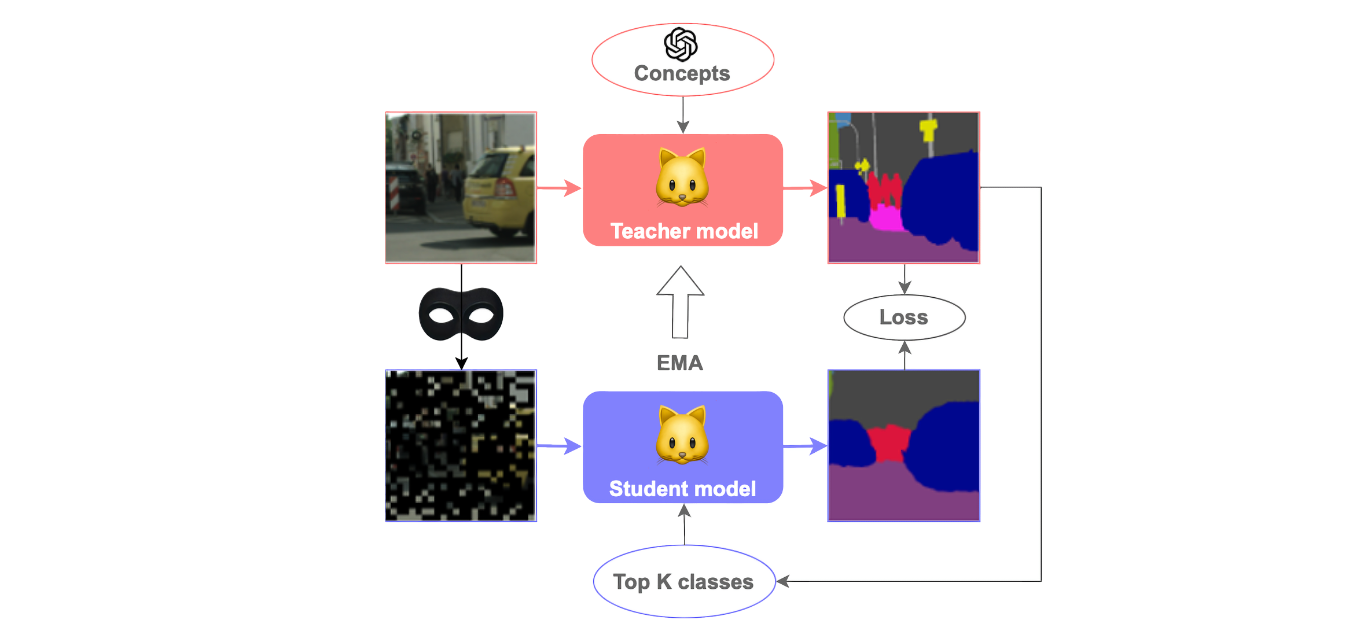

VocAlign: A framework for source-free domain adaptation in open-vocabulary segmentation

March 20, 2025

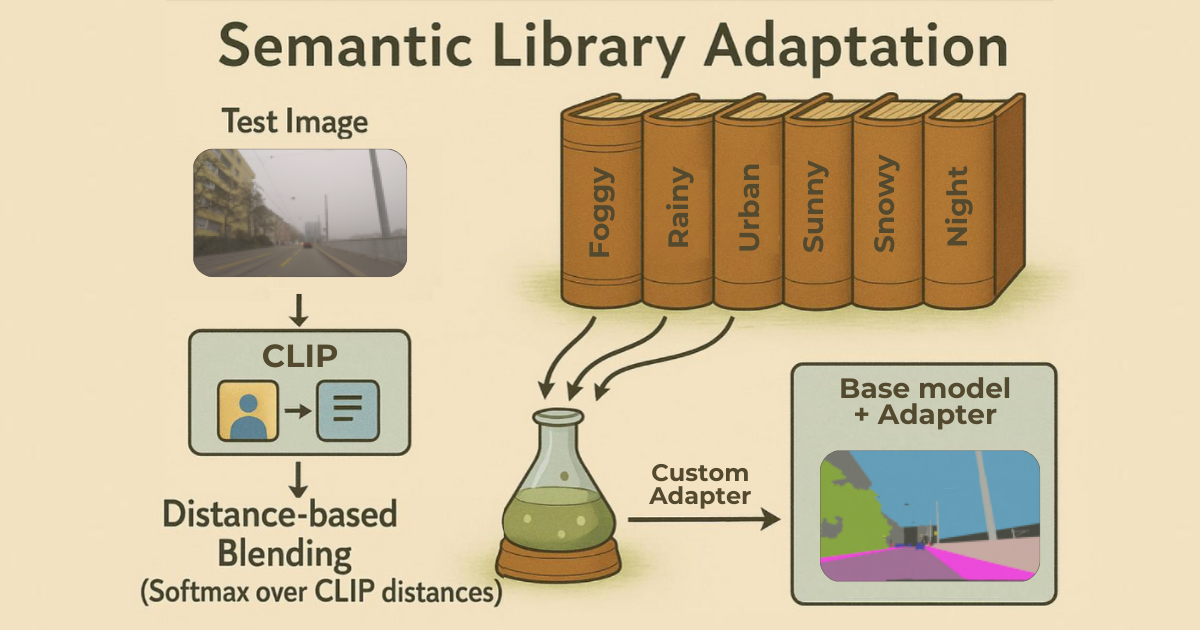

An approach to retrieve and merge LoRA adapters for new domains

Explore our internship and thesis tracks, learn about current focus areas, and see how we support publications at top-tier AI conferences.

ICCV • 2023

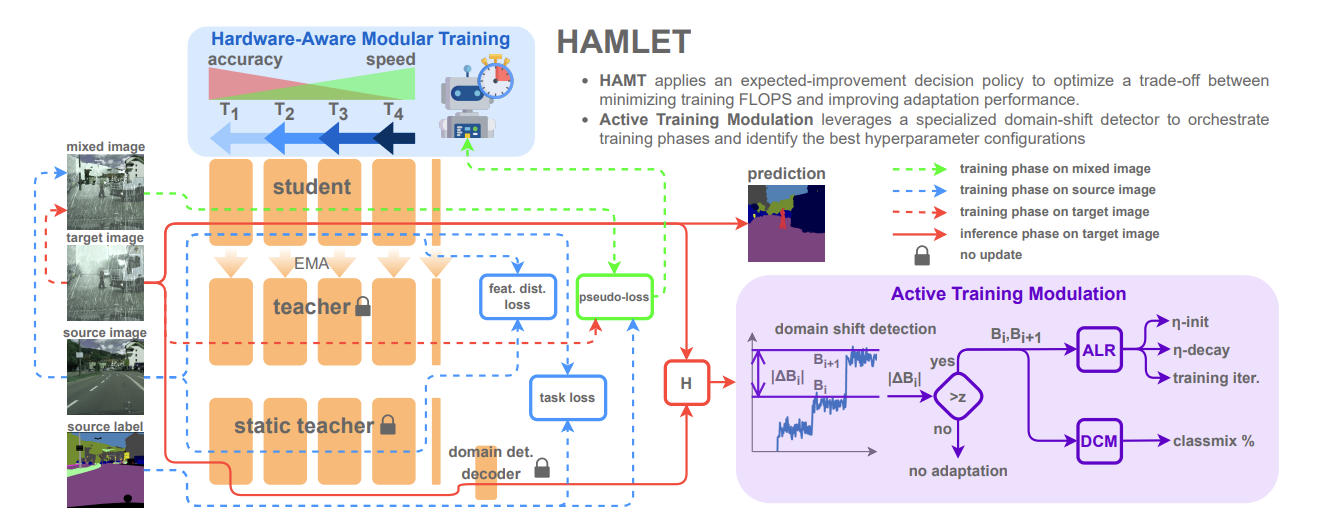

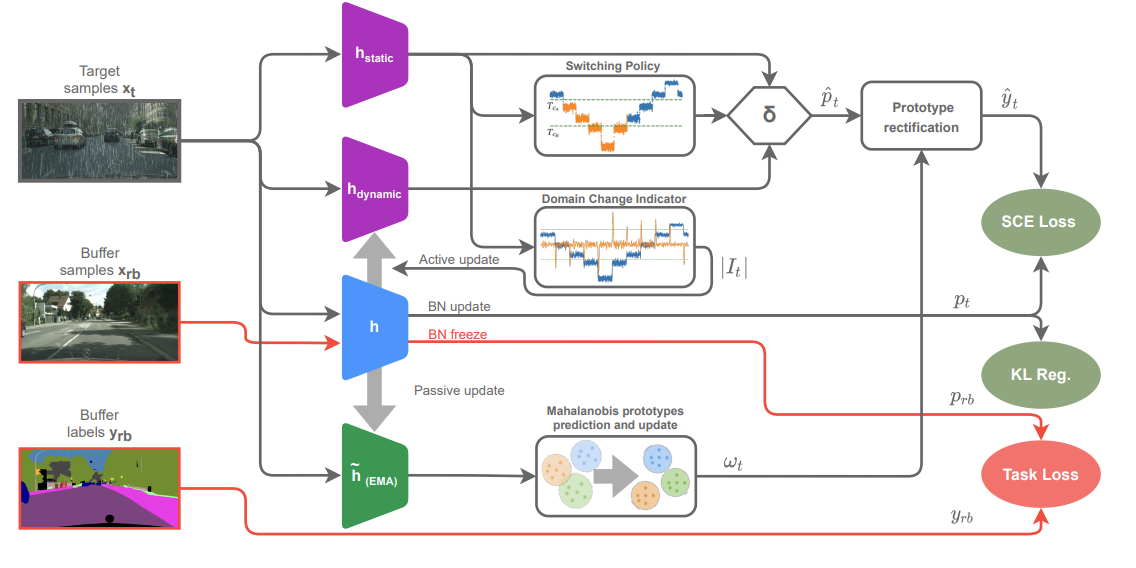

The paper explores real-time adaptation strategies for semantic segmentation models, discussing the trade-offs between adaptation and performance in dynamic environments.

ECCV • 2022

This work introduces a framework for online domain adaptation in semantic segmentation, allowing models to adapt to continuously changing environments without offline retraining.

ICRA • 2020

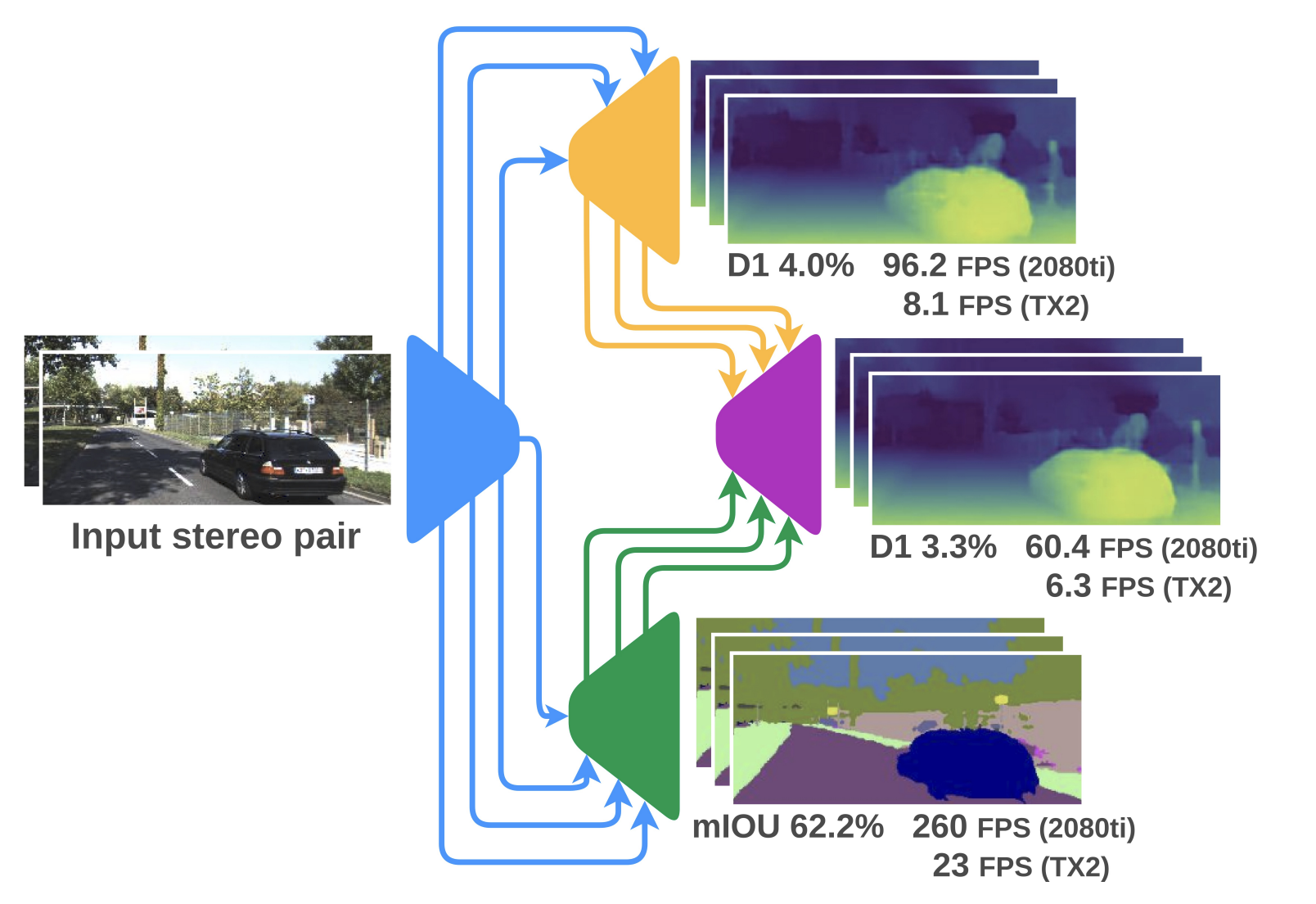

This paper presents a compact and lightweight architecture for real-time semantic stereo matching, enabling efficient inference on embedded devices with minimal accuracy loss.

3DV • 2019

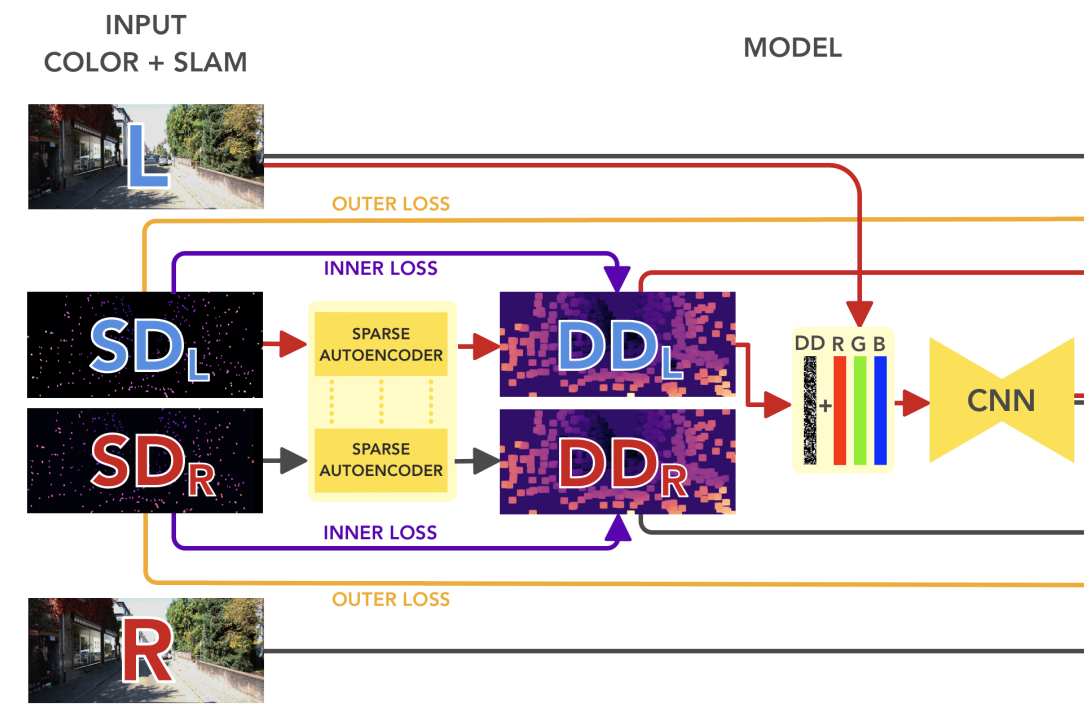

The authors propose a method to improve self-supervised monocular depth estimation by integrating traditional visual odometry techniques, achieving better accuracy in depth prediction.